Comparison of CNN Method Architectures in Arabic Sign Language Image Classification

Keywords:

Parallel processing, SQL injection attack, Aho-Corasick algorithm, SQLI, Pattern Matching algorithm

Abstract

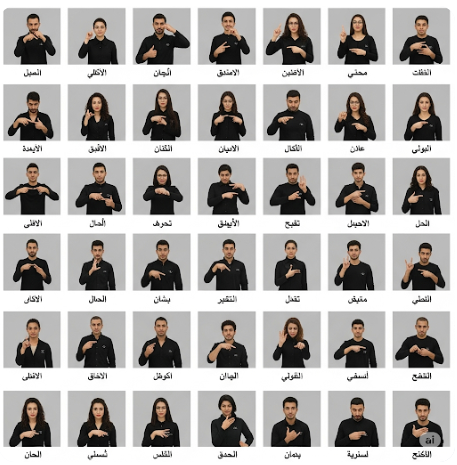

Sign language is an essential communication tool for people with disabilities, especially those who are deaf or speech-impaired, allowing them to interact and participate actively in social and educational settings. This research compares several CNN architectures, namely LeNet-5, AlexNet, and VGG-16, for Arabic Sign Language image classification to identify the architecture with the highest accuracy. The dataset used is the Arabic Alphabet Sign Language Dataset (ArASL), which consists of 47,876 images divided into 28 classes. Training and testing use K-fold cross-validation with K = 5. The test results are evaluated with a confusion matrix, from which accuracy is calculated. Averaged over the folds, LeNet-5 reaches an accuracy of 97.38%, AlexNet 97.96%, and VGG-16 98.17%. VGG-16 therefore performs best of the three architectures for classifying Arabic sign language images on the ArASL dataset.
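The evaluation protocol described in the abstract (5-fold cross-validation, with accuracy read off a confusion matrix and averaged over the folds) can be illustrated with a minimal sketch. This is not the authors' code: the function names, the synthetic one-dimensional data, and the `nearest_centroid` classifier standing in for LeNet-5, AlexNet, or VGG-16 are all assumptions made for illustration.

```python
import random


def k_fold_indices(n_samples, k=5, seed=0):
    """Shuffle the sample indices once and split them into k folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    return [indices[i::k] for i in range(k)]


def confusion_matrix(y_true, y_pred, n_classes):
    """Rows are true classes, columns are predicted classes."""
    matrix = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        matrix[t][p] += 1
    return matrix


def accuracy_from_confusion(matrix):
    """Accuracy = correctly classified samples (diagonal) / all samples."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total


def cross_validate(features, labels, n_classes, train_and_predict, k=5, seed=0):
    """Return the per-fold accuracies and their mean."""
    accuracies = []
    for test_idx in k_fold_indices(len(labels), k, seed):
        held_out = set(test_idx)
        train_idx = [i for i in range(len(labels)) if i not in held_out]
        y_pred = train_and_predict(
            [features[i] for i in train_idx],
            [labels[i] for i in train_idx],
            [features[i] for i in test_idx],
        )
        y_true = [labels[i] for i in test_idx]
        matrix = confusion_matrix(y_true, y_pred, n_classes)
        accuracies.append(accuracy_from_confusion(matrix))
    return accuracies, sum(accuracies) / len(accuracies)


def nearest_centroid(train_x, train_y, test_x):
    """Hypothetical stand-in for a CNN: pick the class with the closest mean."""
    sums, counts = {}, {}
    for x, y in zip(train_x, train_y):
        sums[y] = sums.get(y, 0.0) + x
        counts[y] = counts.get(y, 0) + 1
    centroids = {y: sums[y] / counts[y] for y in sums}
    return [min(centroids, key=lambda c: abs(x - centroids[c])) for x in test_x]


# Synthetic, well-separated toy data (an assumption; not the ArASL images).
rng = random.Random(42)
labels = [c for c in range(3) for _ in range(20)]
features = [10.0 * c + rng.uniform(-1.0, 1.0) for c in labels]

fold_accuracies, mean_accuracy = cross_validate(
    features, labels, n_classes=3, train_and_predict=nearest_centroid, k=5
)
print(fold_accuracies, mean_accuracy)
```

In a real experiment, `train_and_predict` would train one of the CNN architectures on the training folds and return its predictions for the held-out fold; the averaging step stays the same.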

License

Copyright (c) 2024 International Journal of Sociology of Religion

This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.