Comparative Analysis of Naive Bayes and K-Nearest Neighbors Algorithms for Customer Churn Prediction: A Kaggle Dataset Case Study

Keywords:

Customer churn, naïve Bayes, k-nearest neighbors, machine learning, prediction, bank

Abstract

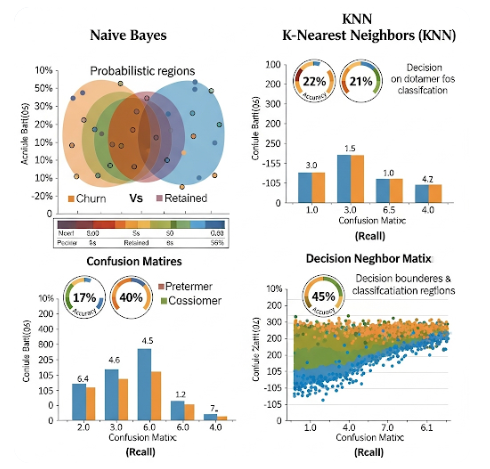

This research compares the Naive Bayes and K-Nearest Neighbors (K-NN) algorithms for predicting customer churn using a Kaggle dataset. During preprocessing, the categorical variables are encoded, and the SMOTE (Synthetic Minority Over-sampling Technique) method is applied so that both models can also be tested on class-balanced data. On the SMOTE-balanced data, Naive Bayes shows improved results, while K-NN suffers a notable drop in performance: although K-NN retains an accuracy of around 0.56, its Precision, Recall, and F1-Score fall significantly. Naive Bayes on balanced data does show a lower F1-Score for the minority class ('exited') but otherwise maintains favorable performance. In conclusion, Naive Bayes is more robust to class imbalance than K-NN, particularly once the data have been balanced. The choice of model depends on the specific goals for handling class imbalance. Further research should optimize the K-NN parameters to improve its performance on imbalanced data, with attention to variations in data scale and distribution.
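Two ideas in the abstract, SMOTE oversampling and the gap between accuracy and minority-class Precision/Recall/F1, can be illustrated with a minimal pure-Python sketch. It is not the authors' code: the function names (`smote`, `accuracy`, `per_class_metrics`) and all toy data are hypothetical, undefined ratios are set to 0.0 here by convention, and a real pipeline would more likely use imbalanced-learn's `SMOTE` and scikit-learn's `classification_report`.

```python
import math
import random


def smote(minority, n_new, k=5, seed=0):
    """Minimal SMOTE sketch (hypothetical helper): build n_new synthetic
    minority-class points. Each one lies on the segment between a randomly
    chosen minority point and one of its k nearest minority neighbours."""
    if len(minority) < 2:
        raise ValueError("SMOTE needs at least two minority samples")
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(minority))
        base = minority[i]
        # k nearest minority-class neighbours of base (Euclidean), excluding base
        neighbours = sorted(
            (p for j, p in enumerate(minority) if j != i),
            key=lambda p: math.dist(base, p),
        )[:k]
        neighbour = rng.choice(neighbours)
        gap = rng.random()  # interpolation factor in [0, 1)
        synthetic.append(
            tuple(b + gap * (n - b) for b, n in zip(base, neighbour))
        )
    return synthetic


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def per_class_metrics(y_true, y_pred, positive):
    """Precision, recall and F1 for one class (e.g. the minority 'exited')."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1


if __name__ == "__main__":
    # Hypothetical toy labels, not the paper's data: 9 stayed (0), 1 exited (1).
    y_true = [0] * 9 + [1]
    y_pred = [0] * 10  # a degenerate model that always predicts "stayed"
    print(accuracy(y_true, y_pred))                       # 0.9
    print(per_class_metrics(y_true, y_pred, positive=1))  # (0.0, 0.0, 0.0)

    # Hypothetical 2-D minority-class points, oversampled with SMOTE
    minority = [(1.0, 2.0), (2.0, 3.0), (3.0, 1.0)]
    print(len(smote(minority, n_new=4, k=2)))             # 4
```

The degenerate model scores 0.9 accuracy yet 0.0 F1 on the 'exited' class, which illustrates why Precision, Recall, and F1-Score are worth reporting alongside accuracy on imbalanced churn data.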

License

Copyright (c) 2024 International Journal of Sociology of Religion

This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.